Larger sets and higher associativity lead to fewer cache conflicts. On a miss, the block that caused the miss is located in main memory and loaded into the cache.

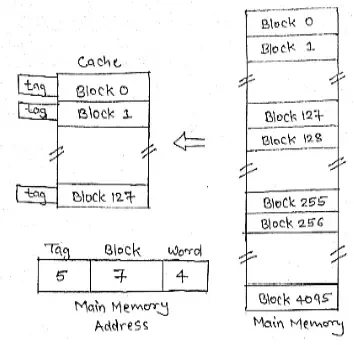

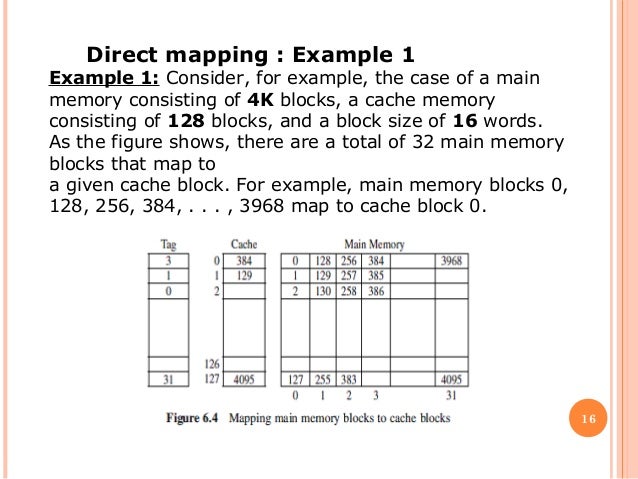

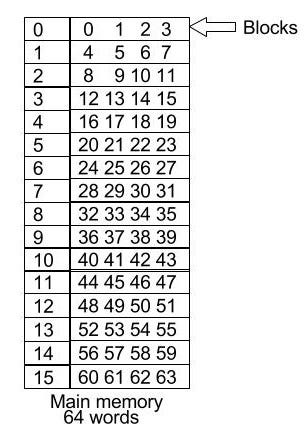

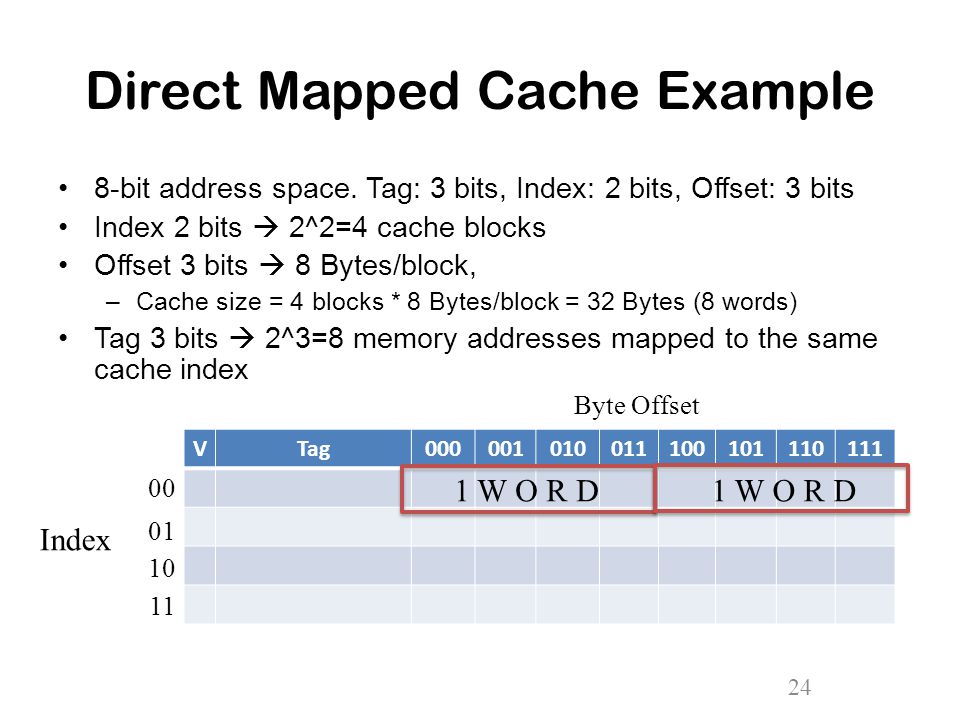

Let's assume that the cache is 16 words in size and main memory is 64 words in size.

Direct mapped cache block size.

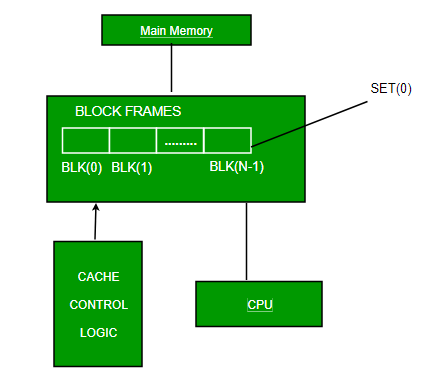

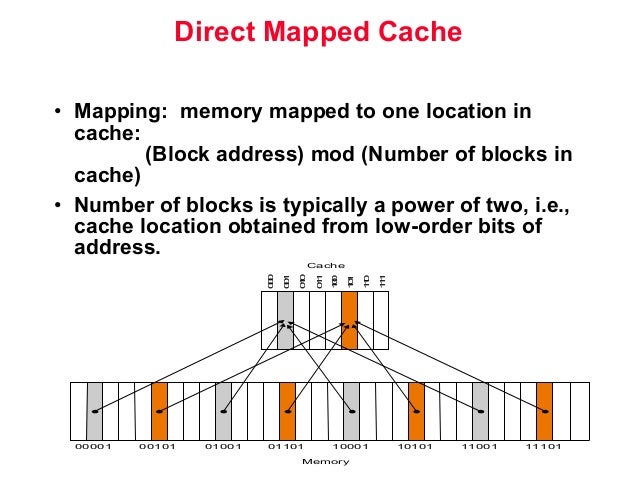

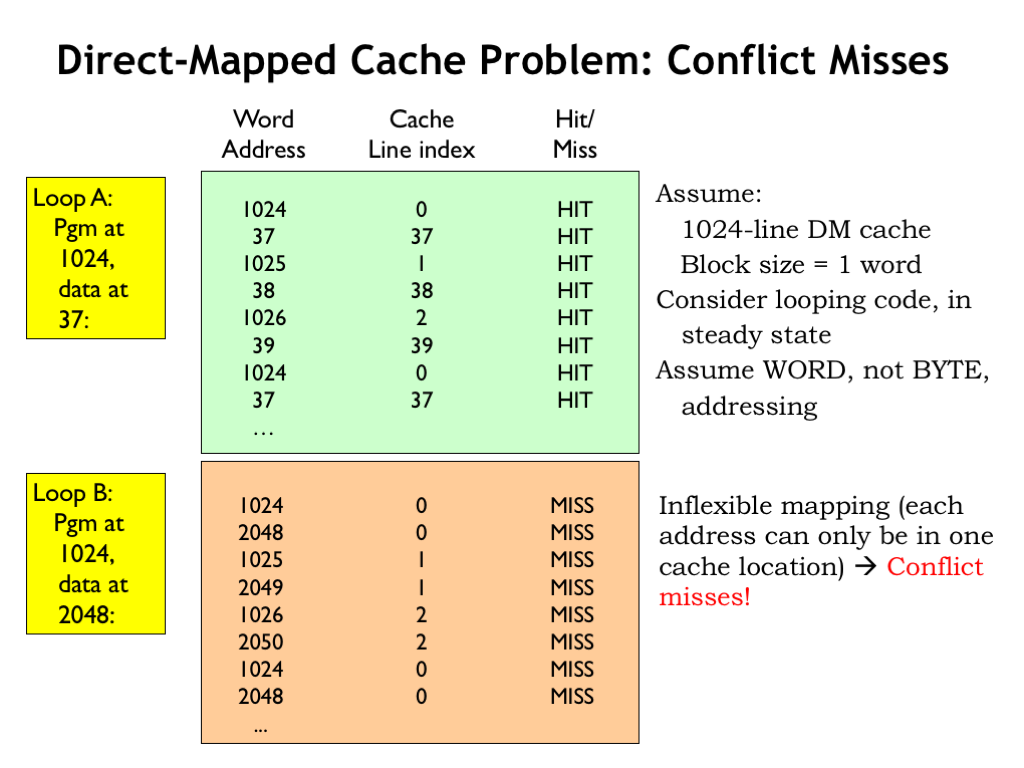

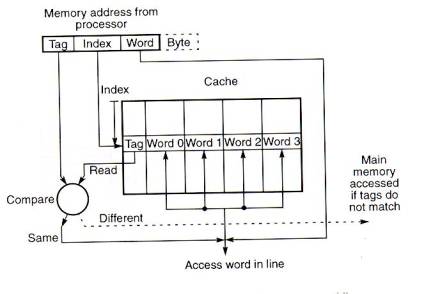

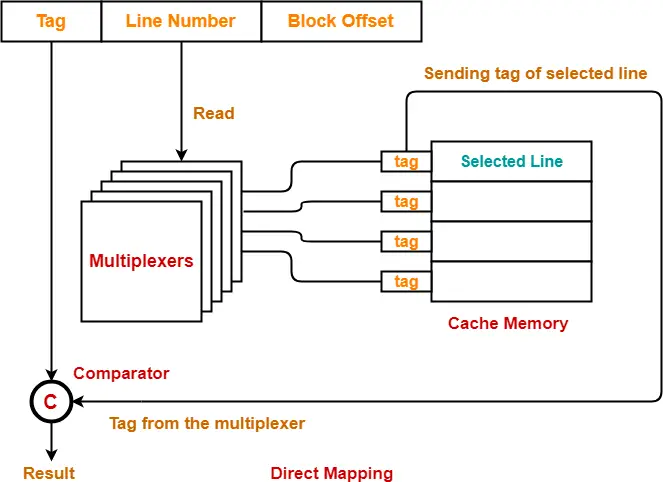

This scheme is called direct mapping because each cache slot corresponds to an explicit set of main memory blocks.
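As a minimal sketch of that correspondence, the snippet below groups memory words by the slot they map to. It assumes the 16-word cache and 64-word memory mentioned earlier, one-word blocks, and the usual modulo placement rule; the names `slot_for` and `slots` are illustrative only.

```python
CACHE_WORDS = 16   # cache size from the running example (assumed one-word blocks)
MEMORY_WORDS = 64  # main memory size from the running example


def slot_for(word_address: int) -> int:
    """Direct mapping: the slot is the address modulo the number of slots."""
    return word_address % CACHE_WORDS


# Group every memory word by the single slot it is allowed to occupy.
slots = {s: [] for s in range(CACHE_WORDS)}
for addr in range(MEMORY_WORDS):
    slots[slot_for(addr)].append(addr)

print(slots[0])  # the memory words that compete for slot 0
print(slots[5])  # the memory words that compete for slot 5
```

Under these assumptions every slot is shared by exactly four memory words, which is the "explicit set" the sentence above refers to.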

Set sizes range from 1 (direct mapped) to 2^k (fully associative).

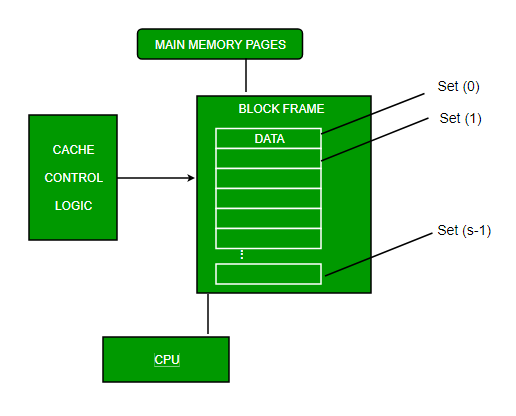

Cache set: a row in the cache.

Figure: miss rate vs. block size (16 to 256 bytes) for cache sizes of 8 KB, 16 KB, 64 KB, and 256 KB.

Miss rate goes up if the block size becomes a significant fraction of the cache size, because the number of blocks that can be held in the same size cache is smaller, increasing capacity misses.

The number of blocks per set is determined by the layout of the cache.

The cache has eight sets, each of which contains a one-word block.

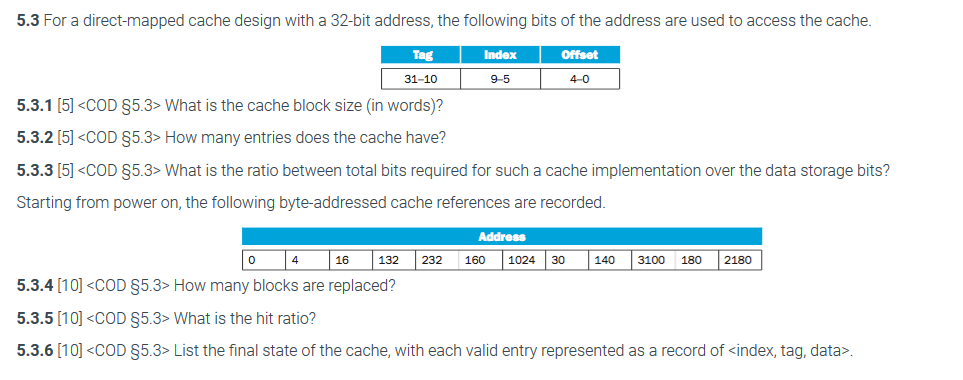

The number of bits needed for cache indexing and the number of tag bits are determined by the cache size, the block size, and the address width.

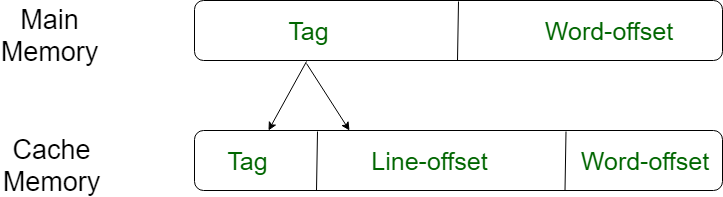

Because different regions of memory may be mapped into a block, the tag is used to differentiate between them.

Since each cache block is of size 4 bytes, the total number of sets in the cache is 256/4, which equals 64 sets.

Tag: a unique identifier for a group of data.

The incoming address to the cache is divided into bits for offset, index, and tag.
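The split can be sketched with a few shifts and masks. The helper `split_address` is a hypothetical name, and the example widths assume byte addressing with 4-byte words and the eight-set, one-word-block cache described above (2 offset bits, 3 index bits).

```python
def split_address(addr: int, offset_bits: int, index_bits: int):
    """Return (tag, index, offset) for an address, low bits first."""
    offset = addr & ((1 << offset_bits) - 1)                  # lowest bits
    index = (addr >> offset_bits) & ((1 << index_bits) - 1)   # middle bits
    tag = addr >> (offset_bits + index_bits)                  # remaining high bits
    return tag, index, offset


# Assumed layout: 4-byte words (2 offset bits), eight sets (3 index bits).
tag, index, offset = split_address(0x2C, offset_bits=2, index_bits=3)
print(tag, index, offset)
```

The index selects the set, the tag is compared against the stored tag to decide hit or miss, and the offset picks the byte within the block.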

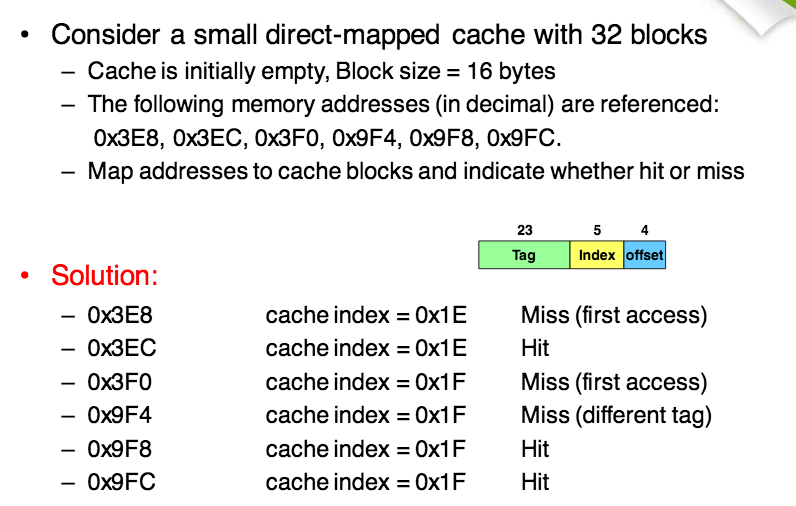

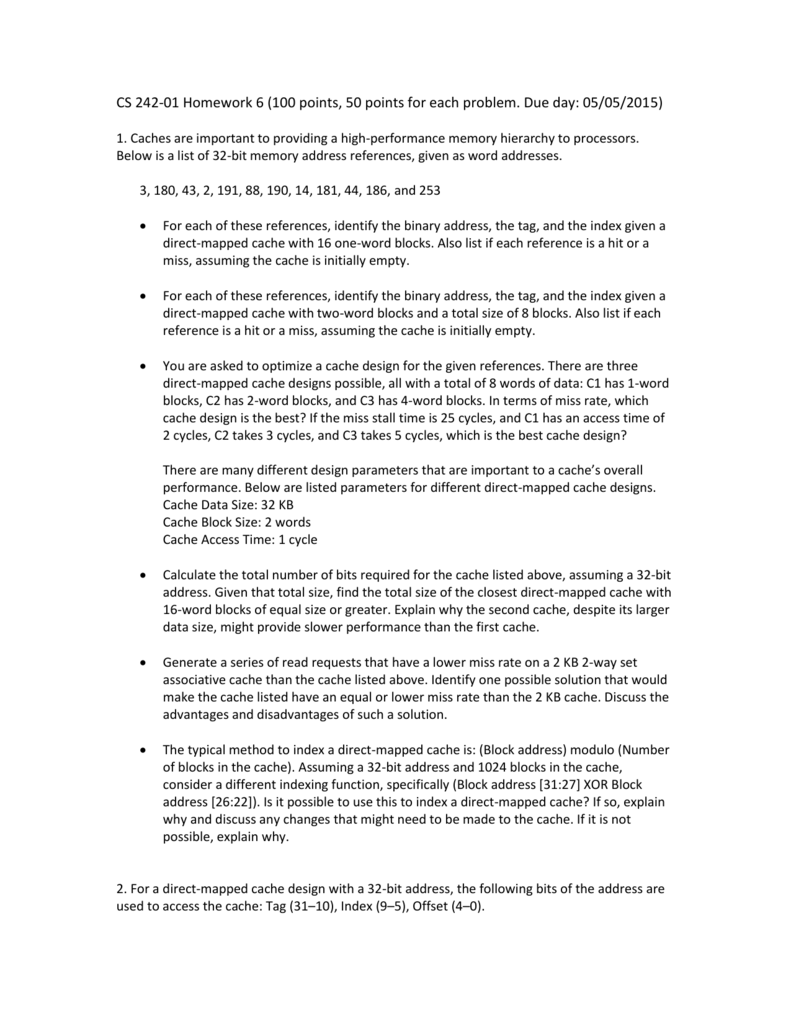

The CPU generates 32-bit addresses.

This mapping is illustrated in Figure 8.5 for a direct mapped cache with a capacity of eight words and a block size of one word.

Direct mapped, set associative, or fully associative.

Associative caches assign each memory address to a particular set within the cache but not to any specific block within that set.
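A rough sketch of that behaviour follows. The class `SetAssociativeCache`, the 4-set, 2-way geometry, and the least-recently-used (LRU) replacement policy are all assumptions made for illustration; the text above does not specify a replacement policy.

```python
class SetAssociativeCache:
    """Toy cache: a block maps to one set but may sit in any way of that set."""

    def __init__(self, num_sets: int, ways: int):
        self.num_sets = num_sets
        self.ways = ways
        # Each set is a list of block numbers, least recently used first.
        self.sets = [[] for _ in range(num_sets)]

    def access(self, block: int) -> bool:
        """Return True on a hit, False on a miss (the block is then loaded)."""
        lines = self.sets[block % self.num_sets]
        if block in lines:
            lines.remove(block)
            lines.append(block)  # mark as most recently used
            return True
        if len(lines) == self.ways:
            lines.pop(0)         # assumed policy: evict the LRU block
        lines.append(block)
        return False


cache = SetAssociativeCache(num_sets=4, ways=2)
results = [cache.access(b) for b in (0, 4, 0, 4, 8, 0)]
print(results)
```

Blocks 0, 4, and 8 all map to set 0 here. The first two coexist in the two ways, so the repeated accesses hit; the third forces an eviction.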

Consider a direct mapped cache of size 32 KB with a block size of 32 bytes.
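For this configuration the field widths can be worked out as in the sketch below. It assumes byte-addressable memory, 32-bit addresses as mentioned elsewhere in this post, 1 KB = 1024 bytes, and power-of-two sizes; `direct_mapped_bits` is a hypothetical helper name.

```python
def direct_mapped_bits(cache_bytes: int, block_bytes: int, address_bits: int):
    """Return (offset_bits, index_bits, tag_bits) for a direct mapped cache.

    Assumes cache_bytes and block_bytes are powers of two.
    """
    num_blocks = cache_bytes // block_bytes
    offset_bits = block_bytes.bit_length() - 1  # log2 of the block size
    index_bits = num_blocks.bit_length() - 1    # log2 of the number of blocks
    tag_bits = address_bits - index_bits - offset_bits
    return offset_bits, index_bits, tag_bits


offset_bits, index_bits, tag_bits = direct_mapped_bits(32 * 1024, 32, 32)
print(offset_bits, index_bits, tag_bits)
```

With 1024 blocks of 32 bytes each, 5 bits address a byte within a block, 10 bits index the cache, and the remaining 17 bits form the tag.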

Direct mapped cache: consider a main memory of 16 kilobytes, organized as 4-byte blocks, and a cache of 256 bytes with a block size of 4 bytes.
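The arithmetic for this example can be laid out as follows, assuming byte-addressable memory and 1 kilobyte = 1024 bytes (neither is stated explicitly above):

$$
\begin{aligned}
\text{address width} &= \log_2(16 \times 1024) = 14 \text{ bits} \\
\text{memory blocks} &= \frac{2^{14}}{2^{2}} = 2^{12} = 4096 \\
\text{cache lines} &= \frac{2^{8}}{2^{2}} = 2^{6} = 64 \\
\text{offset bits} &= \log_2 4 = 2 \\
\text{index bits} &= \log_2 64 = 6 \\
\text{tag bits} &= 14 - 6 - 2 = 6
\end{aligned}
$$

Under these assumptions each cache line is shared by 4096 / 64 = 64 memory blocks, and the 6-bit tag tells them apart.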

If a block contains 4 words, then the number of blocks in main memory can be calculated as follows.
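A minimal sketch of the calculation, assuming the 64-word main memory and 16-word cache from the earlier example (the variable names are illustrative):

```python
MEMORY_WORDS = 64     # main memory size assumed from the earlier example
CACHE_WORDS = 16      # cache size assumed from the earlier example
WORDS_PER_BLOCK = 4   # block size stated above

memory_blocks = MEMORY_WORDS // WORDS_PER_BLOCK   # blocks in main memory
cache_blocks = CACHE_WORDS // WORDS_PER_BLOCK     # blocks the cache can hold
blocks_per_slot = memory_blocks // cache_blocks   # memory blocks sharing a slot

print(memory_blocks, cache_blocks, blocks_per_slot)
```

So main memory holds 16 blocks, the cache holds 4, and each cache slot serves 4 different memory blocks.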

For a direct mapped cache, each main memory block can be mapped to only one slot, but each slot can receive more than one block.
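The consequence is that two blocks sharing a slot evict each other. The toy simulation below illustrates this; the class `DirectMappedCache`, the eight-slot size, and the access trace are assumptions chosen for the example.

```python
class DirectMappedCache:
    """Toy direct mapped cache that tracks which block each slot holds."""

    def __init__(self, num_slots: int):
        self.num_slots = num_slots
        self.slots = [None] * num_slots  # block number held in each slot

    def access(self, block: int) -> bool:
        """Return True on a hit; on a miss, load the block into its slot."""
        slot = block % self.num_slots    # the only slot this block may use
        hit = self.slots[slot] == block
        if not hit:
            self.slots[slot] = block     # replaces whatever was there
        return hit


cache = DirectMappedCache(8)
trace = [0, 8, 0, 8, 1, 1]
hits = [cache.access(b) for b in trace]
print(hits)
```

Blocks 0 and 8 both map to slot 0, so alternating between them misses every time even though the other seven slots are empty. Block 1 has slot 1 to itself and hits on its second access.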

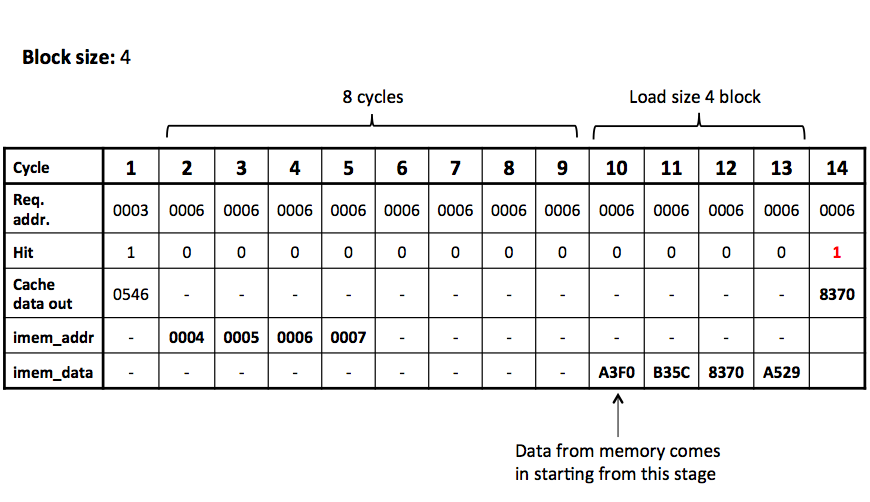
